Urlan, Cosmic Cat: The Making Of - Part 2

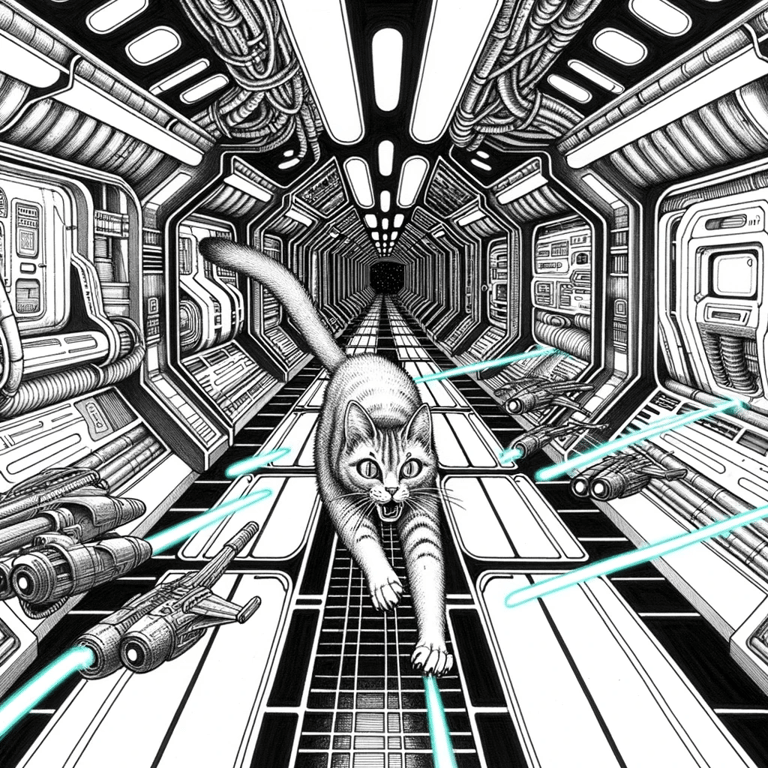

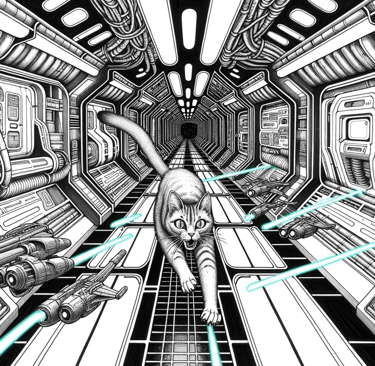

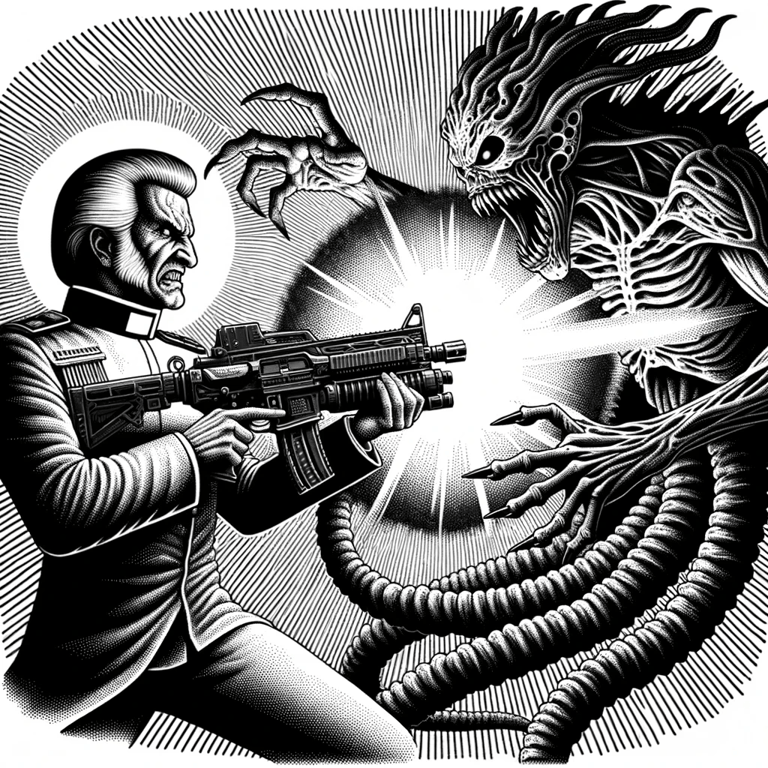

Because when you have cats, big guns, spaceships and tentacular demons, what can go wrong?

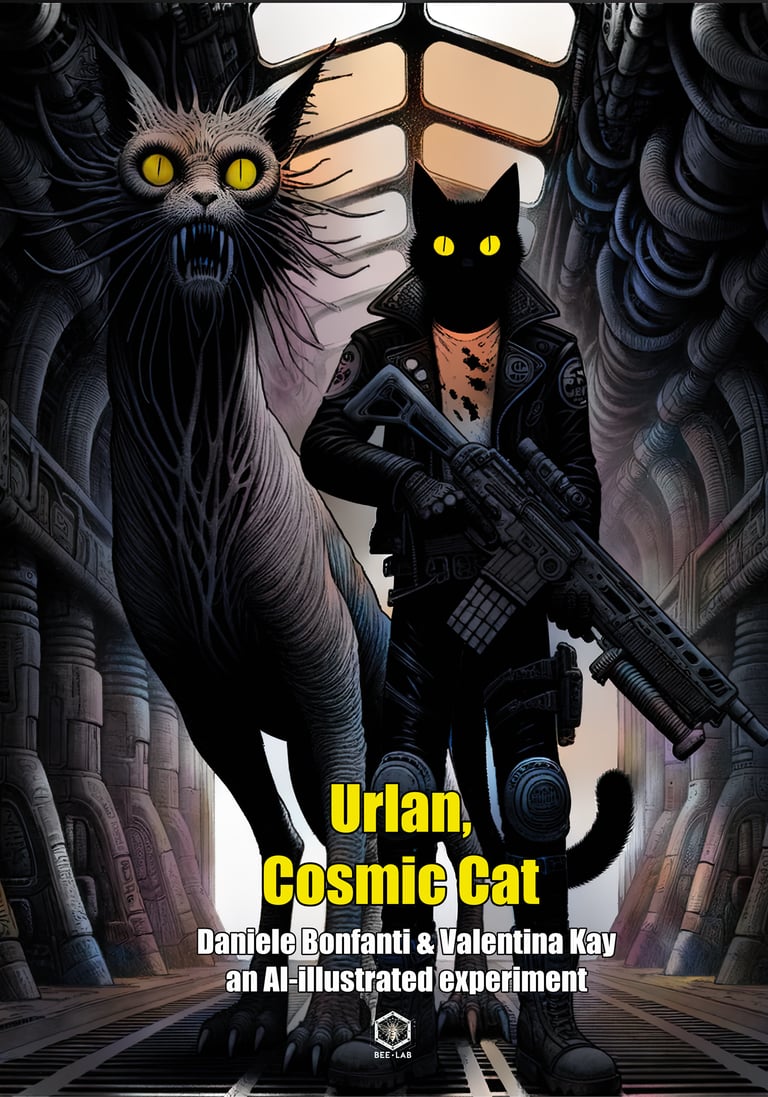

URLAN, COSMIC CAT

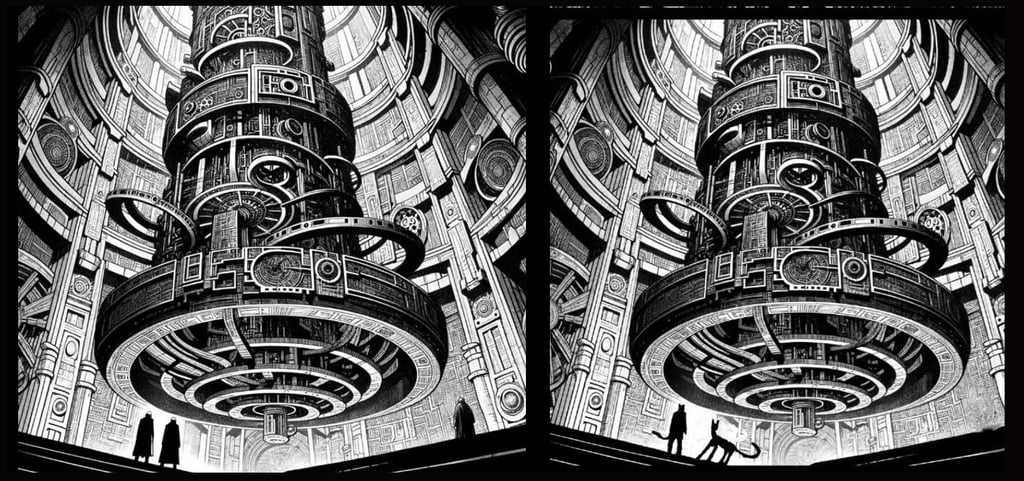

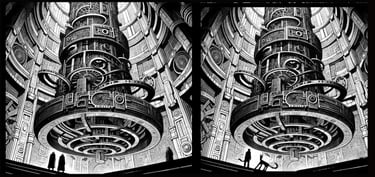

This article is part of the "Making Of" series about "Urlan, Cosmic Cat" and features some of the weirdest, funniest and/or most interesting unused images generated in the rollercoaster process of creating this incredible AI-illustrated graphic novel.

You can check out a free preview of the actual work, grab it on Amazon or watch the Launch Trailer.

Heads up: this article contains some spoilers!

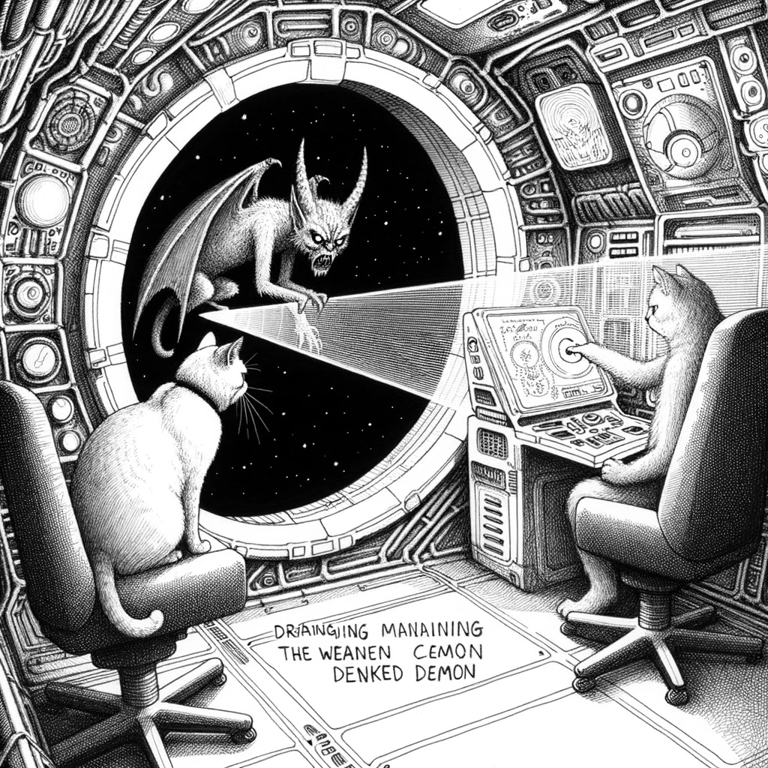

We insisted on studies of the characters and the scenario – “feeding” and training the AI in a way similar to what a human artist would do, after all – and then we went on to the first scene. Good panels began to come out and the story began to take shape, amid lots of stuff we had to discard – you’re seeing some of the funniest in these pages.

From our previous experiences, we decided that we had to work very differently from how you might with a human artist.

We couldn’t start from a whole script and feed it to the AI. We had to do something more akin to a jam session. We had to go along with what came out, discovering for ourselves what would happen. We had to interpret things in the art and turn them into narrative ideas.

We also decided immediately that the story had to be tongue-in-cheek. A serious atmosphere or heavy themes would be completely broken by inconsistencies, whereas in an ironic narrative those same inconsistencies could become an asset.

We could also use techniques and workarounds such as describing Urlan’s weapon as “an AK47” instead of “a futuristic assault rifle”: that would ensure consistency.

For Urlan’s weapon, however, we chose another approach, although we used similar prompting techniques for other aspects.

In fact, we think the whole thing took the right path when we decided to turn the main weakness of the technology into a narrative strength. Okay, so, we have inconsistencies: we could either drop the project or embrace and ride the weirdness. After all, Urlan was recognizable. So, is perfect consistency even necessary for a great comic?

The question sounded blasphemous to our editor-hardwired minds. And yet, as we looked at the art taking form, we could see a story unfolding. Why shouldn’t the reader see it, too?

What if… What if those inconsistencies were there for a reason? We’re the writers, right? Maybe our job is to give them a motivation.

And so Urlan’s rifle became able to reconfigure itself, and his jacket to project holos that camouflage it as a normal piece of clothing – but it’s twitchy because it took a couple of bullets too many. The demon became a shapeshifter, and the ship a temple ship with a thousand doors opening onto different places to find God. None of this was in the original script – Hell, there was no original script. Everything was narrative emerging from the art as it kept being cooked up by ChatGPT/Dall-E.

The whole process was chaotic, but an order arose from it. It was about making a bunch of images in ChatGPT and stopping as soon as it began drifting; then looking at them together and taking a long walk to the beach or through the lava fields outside our house, talking and talking and talking, trying to solve a mystery, to make sense, to give sense; and then, when that was done – oh, there were powerful epiphanies! – trying to imagine what would happen next.

Then feed it to the AI in the next session, rinse and repeat.

A very effective technique we used was “asking” the AI to describe the images it had just produced and discussing with it what worked and what didn’t, so as to keep building better and more coherent prompts as the story continued.

Of course, there were inconsistencies which were just too absurd, so it took a lot of trial and error with the AI, and only one image out of many was usable. Often, only a part of it was usable – it incorporated, for example, a perfect Urlan and a version of T.D. which wasn’t good at all, or vice versa. That was not a big problem, as we could cut or mask the bad parts while laying out the comic – or even cover them with the lettering, why not.

Had we been aiming for “finished”, ready-to-print panels out of ChatGPT, the whole thing would have been impossible.

We also soon understood that a lot of image editing would be necessary in post-production. For example, no matter how hard we tried, ChatGPT kept drawing Urlan wearing a tie. Not in every panel, but many, many great panels had him with a tie!

Un-tying Urlan was sometimes impossible – ChatGPT/Dall-E 3 can’t just redo the same image with small corrections. That’s something Midjourney and Adobe Firefly are better at thanks to their “inpainting” capabilities – meaning you can select part of an existing image and give a prompt to redraw only that part, keeping the rest intact. Dall-E also has something similar (although limited), but it’s not integrated into ChatGPT, so we would have had to export the images, load them up in Dall-E and work on them there. Also, it’s not the same model, so the results would not be great (we tried, for research’s sake… nothing usable came out), and it has a cost – and one of the main tenets of our project was to showcase what could be done with ChatGPT+Dall-E 3 only, and with little investment.

Well, “only” wasn’t possible, as a couple of other pieces of software came to help. Gigapixel AI was used to upscale the images to a resolution fit for a printed book: ChatGPT can give you good-quality PNG images, but they are too small for anything bigger than a small panel. For big panels, splashes and spreads, upscaling was a must. Gigapixel AI costs $99, as a one-off purchase. We thought it would fit very well with our second goal (showcasing what these robots can do). In fact, Gigapixel AI does an incredible job of enlarging images with perfect detail.
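To see why upscaling was a must for bigger panels, here is a rough sketch of the arithmetic in Python. The 1024-pixel source width, the 300 DPI print target and the 6.625-inch page width are illustrative assumptions, not figures from the actual production, and the function names are made up for this example.

```python
import math


def max_print_inches(pixels: int, dpi: int = 300) -> float:
    """Largest printed size, in inches, that a pixel dimension covers at a given DPI."""
    return pixels / dpi


def upscale_factor(pixels: int, target_inches: float, dpi: int = 300) -> int:
    """Smallest whole-number upscale factor needed to cover target_inches at dpi."""
    needed_pixels = target_inches * dpi
    return max(1, math.ceil(needed_pixels / pixels))


SOURCE_PX = 1024       # assumed width of an exported image, in pixels
PAGE_WIDTH_IN = 6.625  # assumed page width, in inches

# How wide can the raw image be printed at 300 DPI?
print(round(max_print_inches(SOURCE_PX), 2))

# How much upscaling would a full-width panel need?
print(upscale_factor(SOURCE_PX, PAGE_WIDTH_IN))
```

Under these assumptions the raw image only covers a small panel at print resolution, while a full-width panel or a spread needs the image enlarged first.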

Then there was Clip Studio Paint EX, for the layout, editing and lettering. Clip Studio Paint EX is a one-off, lifetime licence for about $220, which we already owned and used. You could also do the lettering in Inkscape, which is free, and there are free alternatives to Clip Studio Paint which can do (almost) everything we did for Urlan. We discovered that, although CSP does vectors, it’s not at all on par with Affinity or Illustrator. Anyway, it’s conveniently all-in-one, and we had to respect the “little investment” and “quick” rule. Consider that the ChatGPT subscription costs $20 a month, and that it took less than a month to create Urlan. You get the idea of the total costs.
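For anyone who wants the sum spelled out, here is a minimal sketch in Python using the prices quoted above. Counting "less than a month" as one full month of ChatGPT is an assumption, and the `Cost` class and `total` function are hypothetical names used only for this example.

```python
from dataclasses import dataclass


@dataclass
class Cost:
    name: str
    usd: float
    already_owned: bool = False


COSTS = [
    Cost("ChatGPT subscription (assumed: one full month)", 20),
    Cost("Gigapixel AI (one-off)", 99),
    Cost("Clip Studio Paint EX (one-off, about)", 220, already_owned=True),
]


def total(costs: list[Cost], include_owned: bool = True) -> float:
    """Sum the costs, optionally leaving out software that was already owned."""
    return sum(c.usd for c in costs if include_owned or not c.already_owned)


# Starting from scratch, with every tool bought new
print(total(COSTS))

# New spending only, skipping the licence that was already owned
print(total(COSTS, include_owned=False))
```

Swapping Clip Studio Paint for a free alternative gives the same figure as the second line.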

Original image vs final panel: The AI often managed to do the yellow eyes, but not always. We tried to ask for lettered panels, at first, but none of it was usable (though not terrible). If you look closely, you’ll see Urlan has an extra finger in the original image. And, of course, the tie!

Manual editing is, of course, a skill you need to have (or be willing to learn) if you plan to do a comic with AI – at least with current technology. We didn’t do anything too difficult, but we had to erase ties, color yellow eyes, cut panels, erase strange “intruders”, correct misshapen hands and tails (Urlan often came out sporting two tails, or missing one) and so on.

Original image vs final panel: Sometimes, the AI gave us images with random guys in them that were just too beautiful not to use in the story. So here and there we had to draw in tails, ears and paws… or just, you know, liquify someone out of existence.

Original cover illustration vs final image: the art we chose for the cover was very good. Unfortunately, our heroes’ tails were mixed up and T.D. lacked a rear paw!

We also used Clip Studio Paint’s “Colorize” technology to color the cover – a process which seemed a perfect fit for the whole “showcase what these robots can do” goal, and took about ten seconds. The result was honestly stunning (we only had to recolor Urlan’s eyes manually as they’d come out a dull shade).

Anyway, before the whole Gigapixel and CSP editing and layout phase, let’s take a step back.

(to be continued!)

Urlan, Cosmic Cat

A groundbreaking AI-human collaboration in graphic storytelling. With yellow eyes.